Today’s chatbots aren’t just answering simple questions anymore—they’re booking flights, troubleshooting issues, and connecting to external systems in real time. And as users interact with them across multiple platforms (think web apps, mobile devices, and even third-party tools), one thing becomes clear: behind every seamless experience, there’s a well-orchestrated backend keeping it all running.

But how do you build a chatbot that’s responsive, scalable, and ready to handle thousands of conversations at once—without breaking under pressure?

That’s where event-driven architectures come in.

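In this post, we’ll explore a production-ready approach that combines Kafka and Server-Sent Events (SSE) to power real-time communication between your backend and frontend. This design enables your chatbot to stay responsive under heavy load, push replies to users as soon as they are ready, and scale its backend services independently.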

We’ll break down how these technologies work together, why they’re well-suited for modern chatbots, and how you can implement them to level up your system’s reliability and performance.

Let’s face it—traditional request-response systems weren’t built for the kind of load today’s chatbots have to handle. When you’ve got thousands of users chatting at once, integrations firing off in the background, and messages flying between services, the old model starts to fall apart. It becomes slow, fragile, and tightly coupled.

That’s why event-driven architecture has been such a game-changer.

Instead of waiting around for a response every time something happens, services emit events—little packets of information that other parts of the system can react to when they’re ready. This decouples producers and consumers, making everything more scalable, more resilient, and easier to reason about.
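To make the producer/consumer decoupling concrete, here is a minimal in-process sketch in Python (standard library only). `EventBus`, `subscribe`, and `publish` are hypothetical names for illustration. Unlike Kafka, this toy delivers synchronously and persists nothing, so it only shows the shape of the idea: the publisher never references the code that reacts to its events.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# Hypothetical handler type: receives one event as a plain dict.
Handler = Callable[[dict], None]


class EventBus:
    """Toy in-process event bus (illustration only, not Kafka).

    Producers publish to a topic name and never reference the handlers
    that react to it; handlers subscribe without knowing who publishes.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        # Deliver to every handler registered for this topic, in order.
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(event)
        return len(handlers)  # number of handlers that received the event


# Usage: two independent reactions to the same event.
bus = EventBus()
audit_log: List[str] = []
replies: List[str] = []
bus.subscribe("user-message", lambda e: audit_log.append(e["text"]))
bus.subscribe("user-message", lambda e: replies.append(f"Echo: {e['text']}"))
delivered = bus.publish("user-message", {"user_id": "alice", "text": "Book a flight"})
```

Adding a third reaction (say, analytics) means one more `subscribe` call; the publishing side does not change.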

In our setup, Kafka takes the lead as the central nervous system for event distribution. It makes sure that messages flow reliably between services, even under heavy load.

On the frontend side, we use Server-Sent Events (SSE) to keep the UI in sync with the backend in real time. Unlike WebSockets, SSE is simple, lightweight, and works well for one-way communication from server to browser, which is precisely what most chatbot UIs need.
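Part of what keeps SSE lightweight is its wire format: the server sends a `text/event-stream` response made of plain-text fields. The Python sketch below serializes one event; `format_sse_event` is a hypothetical helper name. It emits optional `id:`, `event:`, and `retry:` fields, one `data:` line per line of payload, and a blank line that tells the browser to dispatch the event.

```python
from typing import Optional


def format_sse_event(data: str, event: Optional[str] = None,
                     event_id: Optional[str] = None,
                     retry_ms: Optional[int] = None) -> str:
    """Serialize one Server-Sent Event in text/event-stream format."""
    lines = []
    if event_id is not None:
        lines.append(f"id: {event_id}")        # remembered by the client for reconnects
    if event is not None:
        lines.append(f"event: {event}")        # if omitted, the client sees a "message" event
    if retry_ms is not None:
        lines.append(f"retry: {retry_ms}")     # reconnection delay hint, in milliseconds
    # CR, LF and CRLF all end a line in the SSE format, so normalize first,
    # then emit one data: line per payload line; the client rejoins them with "\n".
    normalized = data.replace("\r\n", "\n").replace("\r", "\n")
    for chunk in normalized.split("\n"):
        lines.append(f"data: {chunk}")
    # The trailing blank line terminates the event and triggers dispatch.
    return "\n".join(lines) + "\n\n"
```

For example, `format_sse_event('{"text": "Hi"}', event="bot-message", event_id="42")` produces three field lines followed by a blank line.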

The result? A responsive, scalable chatbot experience that doesn’t break down when things get busy.

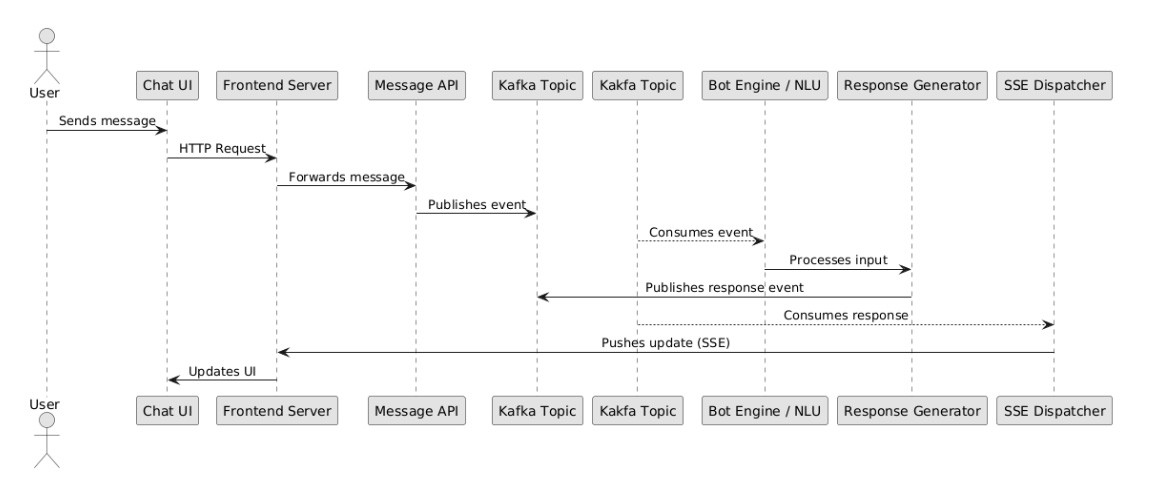

Before we jump into the code, let’s zoom in on the main pieces that make this architecture work. While the whole system may seem like magic from the outside—messages flying in and out, bots replying instantly, UI updating in real time—under the hood, it’s a well-coordinated dance between a few key components.

Let’s break them down:

At the heart of it all is Kafka. Every message your chatbot receives, processes, or sends back goes through it. Think of Kafka as a super-reliable messaging bus that decouples all the moving parts. One service produces a message, another one consumes it—no need for them to know about each other or be online at the same time.

In our case, we typically have two key topics:

- Incoming messages: for everything the user sends
- Bot-responses: for everything the chatbot replies with

This makes it easy to scale services independently and replay or inspect messages when needed.
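To illustrate why a log-based topic makes replay and inspection easy, here is an in-memory stand-in for a single Kafka partition, in Python. `InMemoryTopic`, `produce`, `poll`, `seek`, and the topic and group names are hypothetical, not the Kafka client API, and the sketch simplifies Kafka by committing a group’s position as soon as it polls. What it does mirror: records get increasing offsets, each consumer group tracks its own position, and rewinding a position replays records the log still holds.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Record:
    offset: int
    key: str    # e.g. the user or conversation ID
    value: str


@dataclass
class InMemoryTopic:
    """Append-only log standing in for one Kafka partition (illustration only)."""

    name: str
    records: List[Record] = field(default_factory=list)
    positions: Dict[str, int] = field(default_factory=dict)  # group -> next offset

    def produce(self, key: str, value: str) -> int:
        offset = len(self.records)
        self.records.append(Record(offset, key, value))
        return offset

    def poll(self, group: str, max_records: int = 100) -> List[Record]:
        # Each consumer group reads from its own position, so an audit
        # consumer and the chatbot workers never take records from each other.
        start = self.positions.get(group, 0)
        batch = self.records[start:start + max_records]
        self.positions[group] = start + len(batch)  # simplified: commit on poll
        return batch

    def seek(self, group: str, offset: int) -> None:
        # Rewinding a group's position replays records still in the log.
        self.positions[group] = offset


# Usage with hypothetical topic and group names.
incoming = InMemoryTopic("incoming-messages")
incoming.produce("alice", "Book a flight to Lisbon")
incoming.produce("bob", "My router keeps dropping")
worker_batch = incoming.poll("chatbot-workers")
audit_batch = incoming.poll("audit")
```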

Here’s where Server-Sent Events (SSE) come in. We use a lightweight SSE dispatcher that listens to bot responses and pushes new messages to the client as they arrive.

No polling. No WebSocket overhead (we’ll talk later about why we didn’t go that route). Just a simple HTTP connection that stays open and streams new events as they happen. It’s reliable, efficient, and perfect for one-way updates—exactly what chatbot replies need.
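A sketch of the dispatcher’s core loop in Python, under stated assumptions: the `inbound` queue stands in for a Kafka consumer on the bot-responses topic, and `send` is any function that writes a frame to a user’s open SSE connections and returns how many it reached. `run_dispatcher`, `InboundItem`, and `SendFn` are hypothetical names. Responses for users with no open connection are counted and skipped; the record itself stays in the Kafka topic until retention removes it.

```python
import json
import queue
from typing import Callable, Optional, Tuple

# (user_id, payload) as consumed from the bot-responses topic; None stops the loop.
InboundItem = Optional[Tuple[str, dict]]
# Pushes one SSE frame to a user's open connections; returns how many it reached.
SendFn = Callable[[str, str], int]


def run_dispatcher(inbound: "queue.Queue[InboundItem]", send: SendFn) -> Tuple[int, int]:
    """Drain bot responses and push each one to its user as an SSE frame.

    Returns (delivered, skipped), where skipped counts responses for users
    with no open connection at the time.
    """
    delivered = skipped = 0
    while True:
        item = inbound.get()  # blocks, like a consumer poll loop
        if item is None:
            return delivered, skipped
        user_id, payload = item
        # json.dumps output contains no raw newlines, so one data: line suffices.
        frame = f"data: {json.dumps(payload)}\n\n"
        if send(user_id, frame) > 0:
            delivered += 1
        else:
            skipped += 1  # user offline; the record itself stays in the topic
```

In a real service this loop would run on a background thread for the lifetime of the process rather than stopping on a sentinel.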

Now that we understand the architecture and components, let’s see how it comes to life in code. I’ll walk you through a key piece of the puzzle: the service responsible for managing Server-Sent Events connections and pushing updates to users.

1. Managing SSE Connections — ChatEventService

This service manages open SSE connections per user and handles pushing events.
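A minimal Python sketch of what a `ChatEventService` could look like, using only the standard library. Each open SSE connection is modeled as a `queue.Queue` of ready-made frames that the HTTP layer drains; the method names `register`, `unregister`, `send_event`, and `connection_count` are assumptions. Keeping a list per user, rather than a single connection, lets the same user have several tabs or devices open at once.

```python
import queue
import threading
from typing import Dict, List


class ChatEventService:
    """Tracks open SSE connections per user and pushes frames to them.

    Each connection is modeled as a queue that the HTTP layer drains;
    a user can hold several at once (multiple tabs or devices).
    """

    def __init__(self) -> None:
        self._connections: Dict[str, List["queue.Queue[str]"]] = {}
        self._lock = threading.Lock()

    def register(self, user_id: str) -> "queue.Queue[str]":
        conn: "queue.Queue[str]" = queue.Queue()
        with self._lock:
            self._connections.setdefault(user_id, []).append(conn)
        return conn

    def unregister(self, user_id: str, conn: "queue.Queue[str]") -> None:
        with self._lock:
            conns = self._connections.get(user_id)
            if conns and conn in conns:
                conns.remove(conn)
                if not conns:
                    del self._connections[user_id]  # drop empty entries

    def send_event(self, user_id: str, frame: str) -> int:
        """Queue a ready-made SSE frame for every connection of user_id."""
        with self._lock:
            targets = list(self._connections.get(user_id, []))
        for conn in targets:
            conn.put(frame)
        return len(targets)  # 0 means the user has no open connection

    def connection_count(self, user_id: str) -> int:
        with self._lock:
            return len(self._connections.get(user_id, []))
```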

2. Exposing SSE Endpoint — ChatSseController

Expose an HTTP GET endpoint for clients to open the SSE connection.
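A minimal sketch of such an endpoint using Python’s built-in `http.server`, standing in for whatever web framework hosts `ChatSseController`. The path `/chat/stream`, the `userId` query parameter, the module-level `STREAMS` registry (one stream per user, for brevity), and the 15-second keep-alive are all assumptions; in production the user ID should come from the authenticated session, not the query string. The essentials are the `text/event-stream` content type, disabled caching, a flush after every frame, and cleanup when the client disconnects.

```python
import queue
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer
from typing import Dict
from urllib.parse import parse_qs, urlparse

# Hypothetical registry: user_id -> queue of ready-to-send SSE frames.
STREAMS: Dict[str, "queue.Queue[str]"] = {}
STREAMS_LOCK = threading.Lock()
KEEPALIVE_SECONDS = 15  # assumed interval


class ChatSseHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        url = urlparse(self.path)
        if url.path != "/chat/stream":
            self.send_error(404)
            return
        user_id = parse_qs(url.query).get("userId", [""])[0]
        if not user_id:
            self.send_error(400, "missing userId")
            return

        stream: "queue.Queue[str]" = queue.Queue()
        with STREAMS_LOCK:
            STREAMS[user_id] = stream  # register before headers go out

        self.send_response(200)
        self.send_header("Content-Type", "text/event-stream")
        self.send_header("Cache-Control", "no-cache")
        self.end_headers()
        try:
            while True:
                try:
                    frame = stream.get(timeout=KEEPALIVE_SECONDS)
                except queue.Empty:
                    frame = ": keep-alive\n\n"  # comment line; clients ignore it
                self.wfile.write(frame.encode("utf-8"))
                self.wfile.flush()
        except (BrokenPipeError, ConnectionResetError):
            pass  # the client went away
        finally:
            with STREAMS_LOCK:
                if STREAMS.get(user_id) is stream:
                    del STREAMS[user_id]

    def log_message(self, format: str, *args) -> None:
        pass  # silence per-request logging in this sketch
```

To try it locally: `ThreadingHTTPServer(("127.0.0.1", 8080), ChatSseHandler).serve_forever()`. Note that a thread per open connection is fine for a demo, but an asynchronous server handles thousands of idle SSE connections far more cheaply.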

3. Pushing Chatbot Responses

Anywhere in your backend where a chatbot message is generated, send it on to the user through the SSE layer.
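Given the Kafka-based architecture above, one way to send a generated reply is to publish it to the bot-responses topic keyed by the user ID and let the SSE dispatcher deliver it. Keying by user matters because Kafka’s default partitioning sends records with the same key to the same partition, and ordering is only guaranteed within a partition. The sketch is Python; `publish_bot_reply`, the payload fields, and the injected `produce` callable (which would wrap your Kafka client’s producer) are hypothetical.

```python
import json
import queue
import time
import uuid
from typing import Callable, Tuple

# Hypothetical producer hook: (topic, key, value) -> None.
Produce = Callable[[str, str, str], None]


def publish_bot_reply(produce: Produce, user_id: str, text: str,
                      topic: str = "bot-responses") -> dict:
    """Wrap a generated reply and publish it keyed by user ID.

    With Kafka's default partitioning, records sharing a key go to the same
    partition, so replies to one user stay in order within that partition.
    """
    payload = {
        "messageId": str(uuid.uuid4()),        # lets clients drop duplicates
        "userId": user_id,
        "text": text,
        "sentAt": int(time.time() * 1000),     # epoch milliseconds
    }
    produce(topic, user_id, json.dumps(payload))
    return payload


# Usage with a queue standing in for the real producer.
sent: "queue.Queue[Tuple[str, str, str]]" = queue.Queue()
payload = publish_bot_reply(lambda t, k, v: sent.put((t, k, v)),
                            "alice", "Your flight is booked")
```

Because the reply goes through Kafka, the code that generates it does not need to know whether the user is connected, or to which server instance.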

4. Frontend: Listening to SSE Events (React example)

On the frontend, open an SSE connection and listen for updates.
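In the browser, `EventSource` does the stream parsing for you: a React component typically creates `new EventSource(url)` inside an effect, handles unnamed events in `onmessage` (named events need `addEventListener`), and calls `close()` in the effect’s cleanup. To see what that parsing does with the stream, or to test the endpoint without a browser, here is a Python sketch of the same rules. `parse_sse` and `SseEvent` are hypothetical names, and the `retry` field is ignored.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List


@dataclass
class SseEvent:
    event: str
    data: str
    last_event_id: str


def parse_sse(lines: Iterable[str]) -> Iterator[SseEvent]:
    """Turn text/event-stream lines (line endings already stripped) into events."""
    event_type = ""
    data_lines: List[str] = []
    last_id = ""
    for line in lines:
        if line == "":
            # A blank line dispatches the buffered event, if it has any data.
            if data_lines:
                yield SseEvent(event_type or "message", "\n".join(data_lines), last_id)
            event_type, data_lines = "", []
            continue
        if line.startswith(":"):
            continue  # comment line, e.g. a keep-alive
        name, sep, value = line.partition(":")
        if sep and value.startswith(" "):
            value = value[1:]  # exactly one leading space is stripped
        if name == "data":
            data_lines.append(value)
        elif name == "event":
            event_type = value
        elif name == "id" and "\0" not in value:
            last_id = value  # persists across events, as in EventSource
        # "retry" and unknown field names are ignored in this sketch
```

A test client can feed it `response_text.splitlines()` and assert on the resulting events.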

5. Handling Connection Cleanup & Errors

Don’t forget to clean up connections properly on disconnect or errors.
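A Python sketch of the cleanup side: every write is wrapped so a connection that raises `OSError` (which covers `BrokenPipeError` and `ConnectionResetError`) is removed on the spot, and a periodic heartbeat comment makes dead connections surface even when no chat traffic is flowing. `ConnectionRegistry`, `Writer`, and `heartbeat` are hypothetical names; you would call `heartbeat()` from a timer and `remove()` from the endpoint’s own disconnect handling.

```python
import threading
from typing import Callable, Dict, List

# Hypothetical writer: sends one SSE frame, raises OSError if the client is gone.
Writer = Callable[[str], None]


class ConnectionRegistry:
    """Per-user SSE writers with cleanup on failed writes."""

    def __init__(self) -> None:
        self._conns: Dict[str, List[Writer]] = {}
        self._lock = threading.Lock()

    def add(self, user_id: str, writer: Writer) -> None:
        with self._lock:
            self._conns.setdefault(user_id, []).append(writer)

    def remove(self, user_id: str, writer: Writer) -> None:
        with self._lock:
            writers = self._conns.get(user_id)
            if writers and writer in writers:
                writers.remove(writer)
                if not writers:
                    del self._conns[user_id]

    def send(self, user_id: str, frame: str) -> int:
        """Write frame to each of the user's connections; drop any that fail."""
        with self._lock:
            writers = list(self._conns.get(user_id, []))
        delivered = 0
        # Write outside the lock so a slow client doesn't block add/remove calls.
        for writer in writers:
            try:
                writer(frame)
                delivered += 1
            except OSError:  # covers BrokenPipeError and ConnectionResetError
                self.remove(user_id, writer)
        return delivered

    def heartbeat(self) -> None:
        """Send an SSE comment to every connection so dead ones get detected."""
        with self._lock:
            user_ids = list(self._conns)
        for user_id in user_ids:
            self.send(user_id, ": keep-alive\n\n")

    def count(self, user_id: str) -> int:
        with self._lock:
            return len(self._conns.get(user_id, []))
```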

This approach lets you deliver real-time chatbot messages smoothly and reliably, even when the number of users grows. It’s simple, efficient, and gets the job done without adding extra complexity—so your chatbot feels fast and responsive, just like a real conversation should.

When you think about real-time chat, WebSockets are probably the first thing that pops into your mind. They let the client and server talk back and forth constantly, which sounds perfect.

But here’s the thing: our chatbot mainly just needs to send updates to the user, not the other way around. That’s why we went with Server-Sent Events (SSE) instead.

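Why? SSE is designed for exactly that one-way flow, and it runs over an ordinary HTTP response instead of a separate protocol that needs an upgrade handshake.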

For what we’re building — fast, reliable chatbot replies — SSE hits the sweet spot. If you want full back-and-forth, like typing indicators or live presence, WebSockets might be the way to go. But for most chatbots, SSE gets the job done cleanly and efficiently.
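One practical payoff of SSE for chatbot replies is its built-in reconnection: when the stream drops, the browser’s `EventSource` reconnects on its own and, if events carried an `id:` field, sends the last one it saw in a `Last-Event-ID` request header. A server can use that header to resend what the client missed. The Python sketch below keeps a bounded per-user buffer for this; `ReplayBuffer`, its capacity, and the integer IDs are assumptions, and payloads are assumed to be single-line (for example, JSON from `json.dumps`). Anything older than the buffer would have to be recovered another way, such as re-reading the bot-responses topic.

```python
from collections import deque
from typing import Deque, List, Optional, Tuple


class ReplayBuffer:
    """Keeps the last `capacity` frames for one user so a reconnecting
    EventSource (which sends Last-Event-ID) can catch up on missed events."""

    def __init__(self, capacity: int = 100) -> None:
        self._events: Deque[Tuple[int, str]] = deque(maxlen=capacity)  # oldest drop off
        self._next_id = 1

    def append(self, data: str) -> str:
        """Store a single-line payload and return its SSE frame, id included."""
        event_id = self._next_id
        self._next_id += 1
        self._events.append((event_id, data))
        return f"id: {event_id}\ndata: {data}\n\n"

    def since(self, last_event_id: Optional[str]) -> List[str]:
        """Frames newer than last_event_id (all buffered frames if None or invalid)."""
        try:
            last = int(last_event_id) if last_event_id is not None else 0
        except ValueError:
            last = 0
        return [f"id: {i}\ndata: {d}\n\n" for i, d in self._events if i > last]
```

On reconnect, the endpoint would write `buffer.since(request_headers.get("Last-Event-ID"))` before streaming new events.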

Building a chatbot that feels natural and responsive is no small feat. But behind the scenes, it all comes down to managing messages effectively — making sure every user gets timely, reliable updates no matter how many people are chatting at once.

By using an event-driven architecture powered by Kafka and Server-Sent Events, you can build a system that scales gracefully and keeps conversations flowing smoothly. SSE lets you push messages in real time without unnecessary complexity, while Kafka handles the heavy lifting of distributing events across your backend services.

This setup gives you a solid foundation to create chatbots that don’t just work — they work well.

If you’re ready to take your chatbot to the next level in theSolver style, make scalable messaging like this a priority. Your users will notice the difference.